I’ve been tinkering with web analytics, and I want to share how I built this mini JS SDK. It’s got event tracking, batching with retries, and IndexedDB persistence—no frameworks, just pure JS. Let’s jump in.

By the end, we’ll have a foundation that can be extended further.

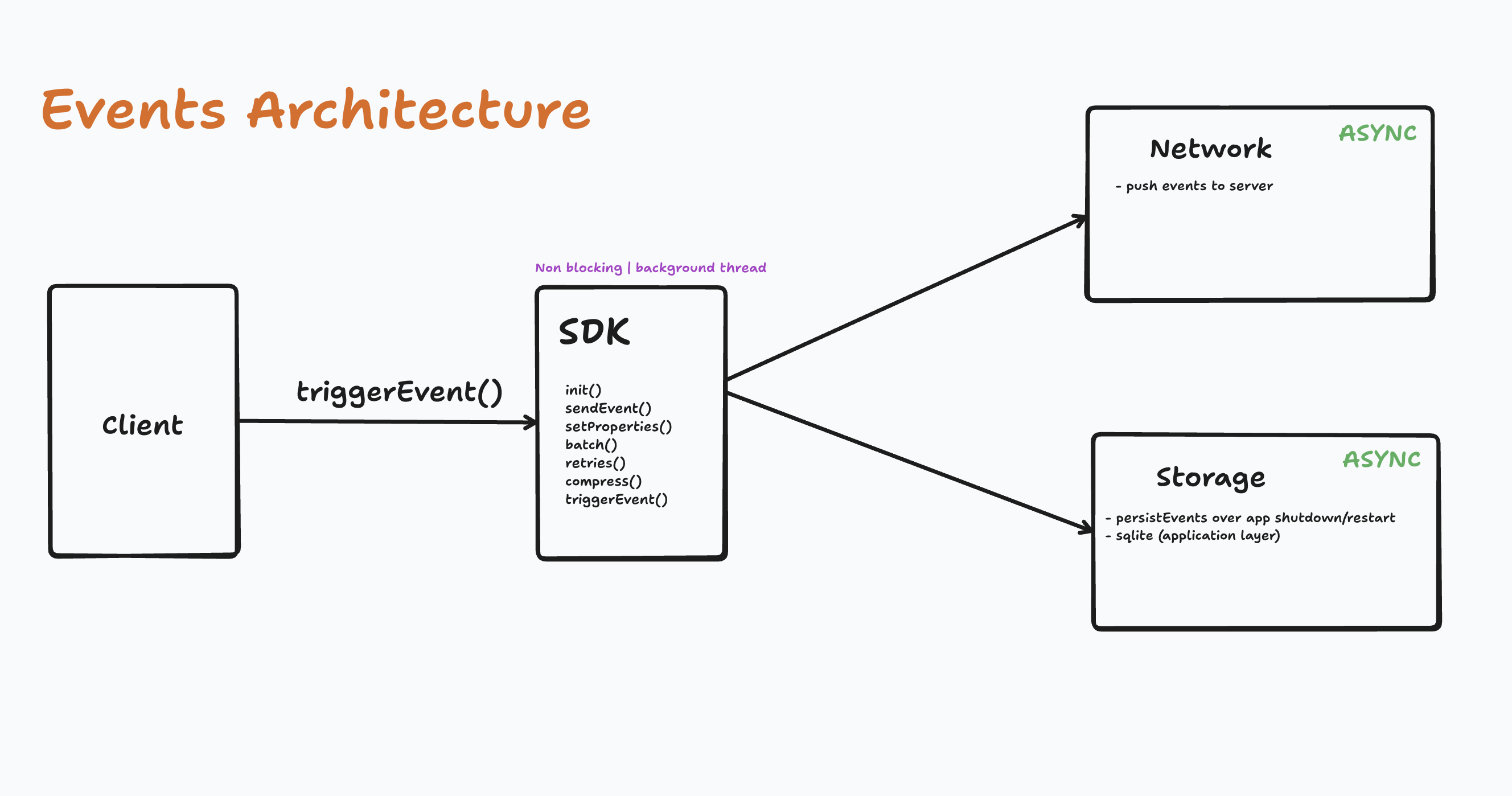

Here’s the rough architecture diagram:

I started with a simple in-memory queue to hold events. The AnalyticsSDK class takes an endpoint and batch size (default 5). When tracking an event, I create an object with name, properties, and timestamp, then push it to the queue.

    class AnalyticsSDK {
      constructor({ endpoint, batchSize = 5 }) {
        this.endpoint = endpoint;
        this.batchSize = batchSize;
        this.queue = [];
      }

      track(eventName, properties = {}) {
        const event = {
          name: eventName,
          properties,
          timestamp: Date.now(),
        };
        this.queue.push(event);
      }
    }
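To see the queue in action, here's a quick usage sketch. It repeats the class so the snippet runs on its own; the endpoint URL and the event names are placeholders I made up for illustration.

```javascript
// Same minimal class as above, repeated so this snippet is self-contained.
class AnalyticsSDK {
  constructor({ endpoint, batchSize = 5 }) {
    this.endpoint = endpoint;
    this.batchSize = batchSize;
    this.queue = [];
  }

  track(eventName, properties = {}) {
    this.queue.push({ name: eventName, properties, timestamp: Date.now() });
  }
}

// Placeholder endpoint: nothing is sent yet, events only pile up in memory.
const sdk = new AnalyticsSDK({ endpoint: "https://example.com/collect" });
sdk.track("page_view", { path: "/pricing" });
sdk.track("button_click", { id: "signup" });

console.log(sdk.queue.length); // 2
console.log(sdk.queue[0].name); // "page_view"
```

At this stage the endpoint is stored but never used; sending comes next.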

Adding Some Smarts: Batching and Sending It Out

To cut down on network calls, I batch events, compress them, and send each batch in a single POST. Compressing with the CompressionStream API shrinks payloads by roughly 60-80%, depending on how repetitive the event data is.

    async flush() {
      if (this.queue.length === 0) return;

      const batch = this.queue.splice(0, this.batchSize);
      try {
        const compressed = await compressData(JSON.stringify(batch));
        const response = await fetch(this.endpoint, {
          method: "POST",
          headers: {
            "Content-Encoding": "gzip", // tell server it’s compressed
            "Content-Type": "application/json",
          },
          body: compressed,
        });
        // fetch only rejects on network errors, so treat HTTP errors as failures too
        if (!response.ok) throw new Error(`Flush failed: HTTP ${response.status}`);
      } catch (err) {
        this.queue.unshift(...batch); // put the batch back so it isn't lost
        throw err;
      }
    }

    // Utility for compression
    async function compressData(data) {
      const encoder = new TextEncoder();
      const stream = new Blob([encoder.encode(data)])
        .stream()
        .pipeThrough(new CompressionStream("gzip"));

      // Collect the compressed chunks into a single Blob
      const chunks = [];
      const reader = stream.getReader();
      while (true) {
        const { done, value } = await reader.read();
        if (done) break;
        chunks.push(value);
      }
      return new Blob(chunks);
    }
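A quick way to sanity-check the compression step is a round trip: compress a batch, decompress it, and compare. The sketch below reuses the `compressData` helper; `decompressData` and `roundTrip` are hypothetical helpers I added for the check and are not part of the SDK. It assumes a runtime with `CompressionStream`, `DecompressionStream`, `Blob`, and `Response` available (modern browsers, recent Node).

```javascript
// Same helper as in the post: gzip a string into a Blob.
async function compressData(data) {
  const encoder = new TextEncoder();
  const stream = new Blob([encoder.encode(data)])
    .stream()
    .pipeThrough(new CompressionStream("gzip"));
  const chunks = [];
  const reader = stream.getReader();
  while (true) {
    const { done, value } = await reader.read();
    if (done) break;
    chunks.push(value);
  }
  return new Blob(chunks);
}

// Hypothetical counterpart: gunzip a Blob back into a string.
async function decompressData(blob) {
  const stream = blob.stream().pipeThrough(new DecompressionStream("gzip"));
  return new Response(stream).text();
}

// Compress a made-up batch, decompress it, and report sizes in bytes.
async function roundTrip() {
  const events = Array.from({ length: 50 }, (_, i) => ({
    name: "page_view",
    properties: { path: "/docs", index: i },
    timestamp: 1700000000000 + i,
  }));
  const json = JSON.stringify(events);
  const compressed = await compressData(json);
  const restored = await decompressData(compressed);
  return {
    originalBytes: new Blob([json]).size,
    compressedBytes: compressed.size,
    same: restored === json,
  };
}

roundTrip().then((result) => console.log(result));
```

The exact sizes depend on the data, so measure with your own events before relying on a specific ratio. The server also has to understand a gzip-encoded request body, which not every backend does by default.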

Handling the Inevitable: Retries with Backoff

For resilience, I added retries with exponential backoff: up to 3 tries, starting with a 1-second delay.

    async flushWithRetry(retries = 3, delay = 1000) {
      for (let i = 0; i < retries; i++) {
        try {
          await this.flush();
          return;
        } catch {
          // Exponential backoff: the wait doubles after each failure (1s, 2s, 4s)
          await new Promise((res) => setTimeout(res, delay * 2 ** i));
        }
      }
    }

Retrying keeps a brief network hiccup from turning into lost events.
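For reference, exponential backoff doubles the wait after every failed attempt. Here's a small sketch of the resulting schedule; `backoffDelays` is a made-up helper for illustration, not part of the SDK.

```javascript
// Hypothetical helper: list the wait (in ms) that follows each failed attempt.
function backoffDelays(retries = 3, baseDelay = 1000) {
  return Array.from({ length: retries }, (_, attempt) => baseDelay * 2 ** attempt);
}

console.log(backoffDelays()); // [ 1000, 2000, 4000 ]
console.log(backoffDelays(4, 500)); // [ 500, 1000, 2000, 4000 ]
```

A common refinement is to add a little random jitter to each delay so many clients don't retry at the same instant, but I've left that out to keep things simple.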

Bringing in the Big Guns: IndexedDB for Persistence

For offline persistence, I used IndexedDB: it's asynchronous, has a large capacity, and doesn't block the main thread. I created an EventStorage class with save, getAll, and clear methods.

    class EventStorage {
      constructor(dbName = "AnalyticsDB", storeName = "events") {
        this.dbName = dbName;
        this.storeName = storeName;
        this.dbPromise = this.init();
      }

      async init() {
        return new Promise((resolve, reject) => {
          const req = indexedDB.open(this.dbName, 1);
          req.onupgradeneeded = (e) => {
            const db = e.target.result;
            if (!db.objectStoreNames.contains(this.storeName)) {
              db.createObjectStore(this.storeName, { autoIncrement: true });
            }
          };
          req.onsuccess = (e) => resolve(e.target.result);
          req.onerror = (e) => reject(e.target.error);
        });
      }

      async save(event) {
        const db = await this.dbPromise;
        return new Promise((resolve, reject) => {
          const tx = db.transaction(this.storeName, "readwrite");
          tx.objectStore(this.storeName).add(event);
          tx.oncomplete = () => resolve();
          tx.onerror = (e) => reject(e.target.error);
        });
      }

      async getAll() {
        const db = await this.dbPromise;
        return new Promise((resolve, reject) => {
          const tx = db.transaction(this.storeName, "readonly");
          const req = tx.objectStore(this.storeName).getAll();
          req.onsuccess = (e) => resolve(e.target.result);
          req.onerror = (e) => reject(e.target.error);
        });
      }

      async clear() {
        const db = await this.dbPromise;
        return new Promise((resolve, reject) => {
          const tx = db.transaction(this.storeName, "readwrite");
          tx.objectStore(this.storeName).clear();
          tx.oncomplete = () => resolve();
          tx.onerror = (e) => reject(e.target.error);
        });
      }
    }
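Since the SDK only needs save, getAll, and clear, anything with those three async methods can stand in for EventStorage. As a rough sketch, here's an in-memory version that could be handy in tests or in environments without IndexedDB. `MemoryStorage` and `demo` are hypothetical names of mine, not something the SDK ships with.

```javascript
// Hypothetical stand-in with the same async interface as EventStorage.
// Events live in a plain array, so nothing survives a page reload.
class MemoryStorage {
  constructor() {
    this.events = [];
  }

  async save(event) {
    this.events.push(event);
  }

  async getAll() {
    return [...this.events]; // return a copy so callers can't mutate the store
  }

  async clear() {
    this.events = [];
  }
}

// Exercise the three methods the SDK relies on.
async function demo() {
  const storage = new MemoryStorage();
  await storage.save({ name: "page_view", properties: {}, timestamp: 1 });
  await storage.save({ name: "click", properties: {}, timestamp: 2 });
  const saved = await storage.getAll();
  await storage.clear();
  const afterClear = await storage.getAll();
  return { saved: saved.length, afterClear: afterClear.length };
}

demo().then((result) => console.log(result)); // { saved: 2, afterClear: 0 }
```

Keeping the interface this small is what makes the storage layer easy to swap.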

Tying It All Together in the SDK

With storage integrated, the SDK restores the queue on init, saves each event to both the queue and the DB, and flushes once the batch size is hit. After a successful flush, it clears the DB and re-saves whatever is still queued.

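    class AnalyticsSDK {
      constructor({ endpoint, batchSize = 5 }) {
        this.endpoint = endpoint;
        this.batchSize = batchSize;
        this.queue = [];
        this.storage = new EventStorage();
        // A constructor can't await, so keep the promise and await it in track()
        this.ready = this.restoreQueue();
      }

      async restoreQueue() {
        // Load events persisted by a previous session
        this.queue = await this.storage.getAll();
      }

      async track(eventName, properties = {}) {
        await this.ready; // make sure the restore can't overwrite new events
        const event = { name: eventName, properties, timestamp: Date.now() };
        this.queue.push(event);
        await this.storage.save(event);
        if (this.queue.length >= this.batchSize) {
          this.flushWithRetry(); // fire and forget: track() doesn't wait for the network
        }
      }

      async flush() {
        if (this.queue.length === 0) return;

        const batch = this.queue.splice(0, this.batchSize);
        try {
          const compressed = await compressData(JSON.stringify(batch));
          const response = await fetch(this.endpoint, {
            method: "POST",
            headers: {
              "Content-Encoding": "gzip", // tell server it’s compressed
              "Content-Type": "application/json",
            },
            body: compressed,
          });
          // fetch only rejects on network errors, so treat HTTP errors as failures too
          if (!response.ok) throw new Error(`Flush failed: HTTP ${response.status}`);
        } catch (err) {
          this.queue.unshift(...batch); // put the batch back so a retry resends it
          throw err;
        }

        // Batch delivered: clear the DB, then re-save whatever is still queued
        await this.storage.clear();
        for (const event of this.queue) {
          await this.storage.save(event);
        }
      }

      async flushWithRetry(retries = 3, delay = 1000) {
        for (let i = 0; i < retries; i++) {
          try {
            await this.flush();
            return;
          } catch {
            // Exponential backoff: the wait doubles after each failure (1s, 2s, 4s)
            await new Promise((res) => setTimeout(res, delay * 2 ** i));
          }
        }
      }
    }

    // Utility for compression (a standalone function, so it lives outside the class)
    async function compressData(data) {
      const encoder = new TextEncoder();
      const stream = new Blob([encoder.encode(data)])
        .stream()
        .pipeThrough(new CompressionStream("gzip"));

      const chunks = [];
      const reader = stream.getReader();
      while (true) {
        const { done, value } = await reader.read();
        if (done) break;
        chunks.push(value);
      }
      return new Blob(chunks);
    }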

Wrapping Up the Adventure

There it is: a solid SDK foundation for tracking, batching, retrying, and persisting events. Expand it as needed; I'd love to hear about your tweaks!
